The Three Pillars of TULIP

- Fast: Explanations are generated on the fly, enabling real-time decision support

- Interactive: Users can explore, question, and, if needed, contest AI decisions through intuitive interfaces

- Cognitive-based: Explanations are tailored to users without ML expertise, using familiar concepts and visual representations

Two phases: experimentation and production

In experimentation: Evaluating XAI presentations with human participants is very expensive. I am designing and developing a software platform that enables experiments with real people. The aim is to understand how well their mental models align with the ML model, how “actionable” the presented explanations are, and whether using the system changes their trust.

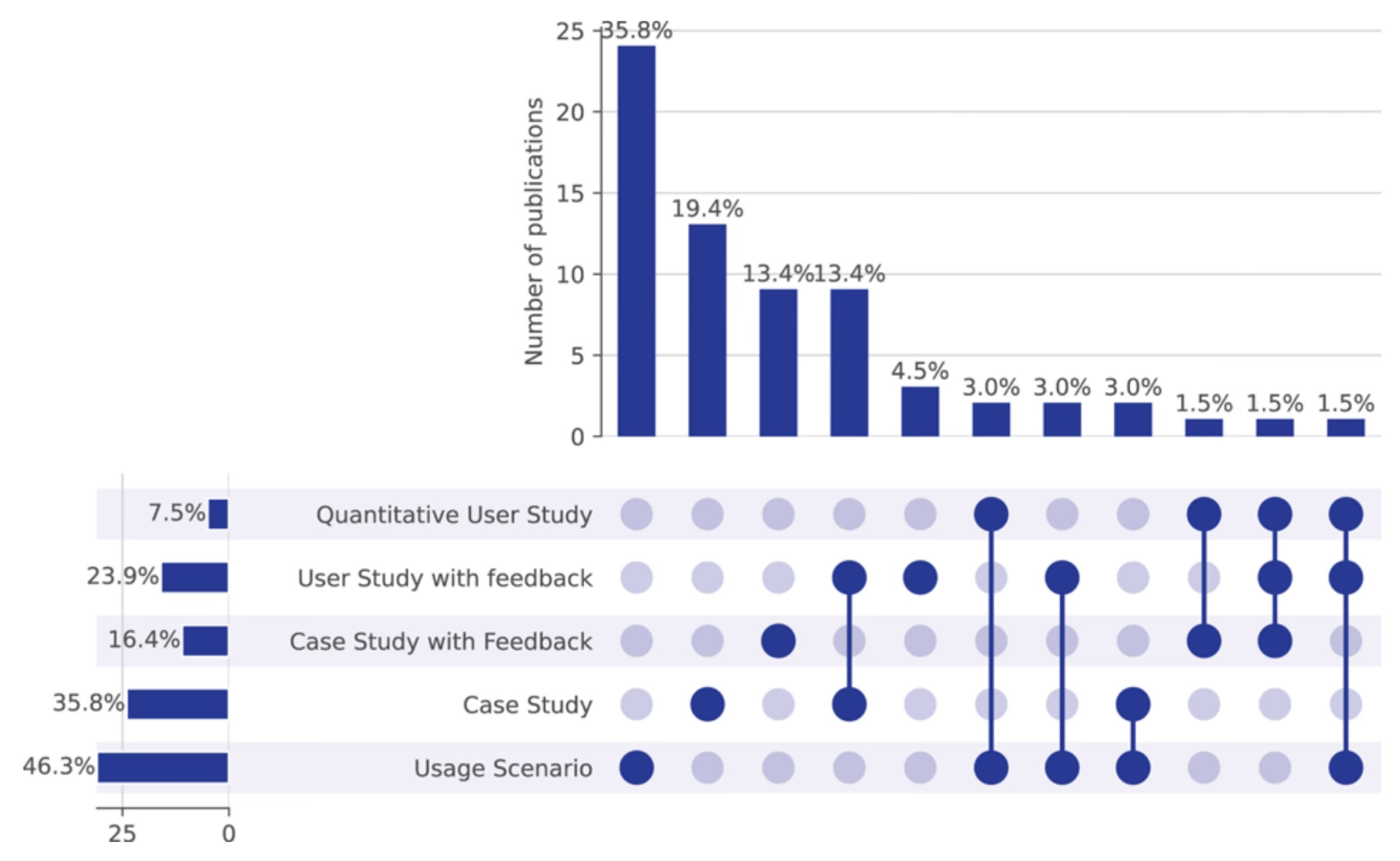

This graph shows that when explanation presentations are evaluated, the evaluation usually relies on a usage scenario, the weakest form of human evaluation.

In production: Traditional XAI (Explainable AI) tools are designed for ML experts. TULIP bridges the gap between complex ML models and the domain experts who use them, such as doctors, lawyers, loan officers, and other professionals who need to understand and trust AI decisions in their work.

Explorable explanations

The interaction panel lets users explore AI decisions using their own domain knowledge. It is also one of the key factors under study: whether providing this ability improves users' understanding.

Context

This demo is part of Fabio Michele Russo's PhD project on user-centric explainable AI at the IMT School for Advanced Studies Lucca. My goal is to develop new approaches to explainability that prioritize the needs of end users over technical sophistication.

For more information, read my ECAI 2025 Doctoral Consortium paper →

Visit my scientific portfolio and/or email me →